Human-centered NLP Fact-checking

Designing AI tools to assist human fact-checkers

The massive scale of intentional disinformation and inadvertent misinformation threatens modern society. To combat this, journalists and fact-checkers perform a key societal function by debunking fake news, online rumors, and conspiracy theories. While human fact-checking has proven effective by various measures, it remains a largely manual affair today, which limits the scale of its reach and practical impact.

As part of the UT Good Systems project (Designing Responsible AI Technologies to Protect Information Integrity), led by Prof. Matt Lease, I collaborated with colleagues to design human-centered AI tools that help fact-checkers address this global challenge.

Publications & Presentations

[Journal] Houjiang Liu*, Anubrata Das*, Alexander Boltz*, Didi Zhou, Daisy Pinaroc, Matthew Lease, and Min Kyung Lee. 2024. "Human-centered NLP Fact-checking: Co-Designing with Fact-checkers using Matchmaking for AI." Proceedings of the ACM on Human-Computer Interaction, 8(CSCW2), Article 423, 44 pages. Paper | arXiv

[Accepted to CSCW'25] Houjiang Liu, Jacek Gwizdka, and Matthew Lease. 2024. "Exploring Multidimensional Checkworthiness: Designing AI-assisted Claim Prioritization for Human Fact-checkers." arXiv

The State of Automated Fact-checking

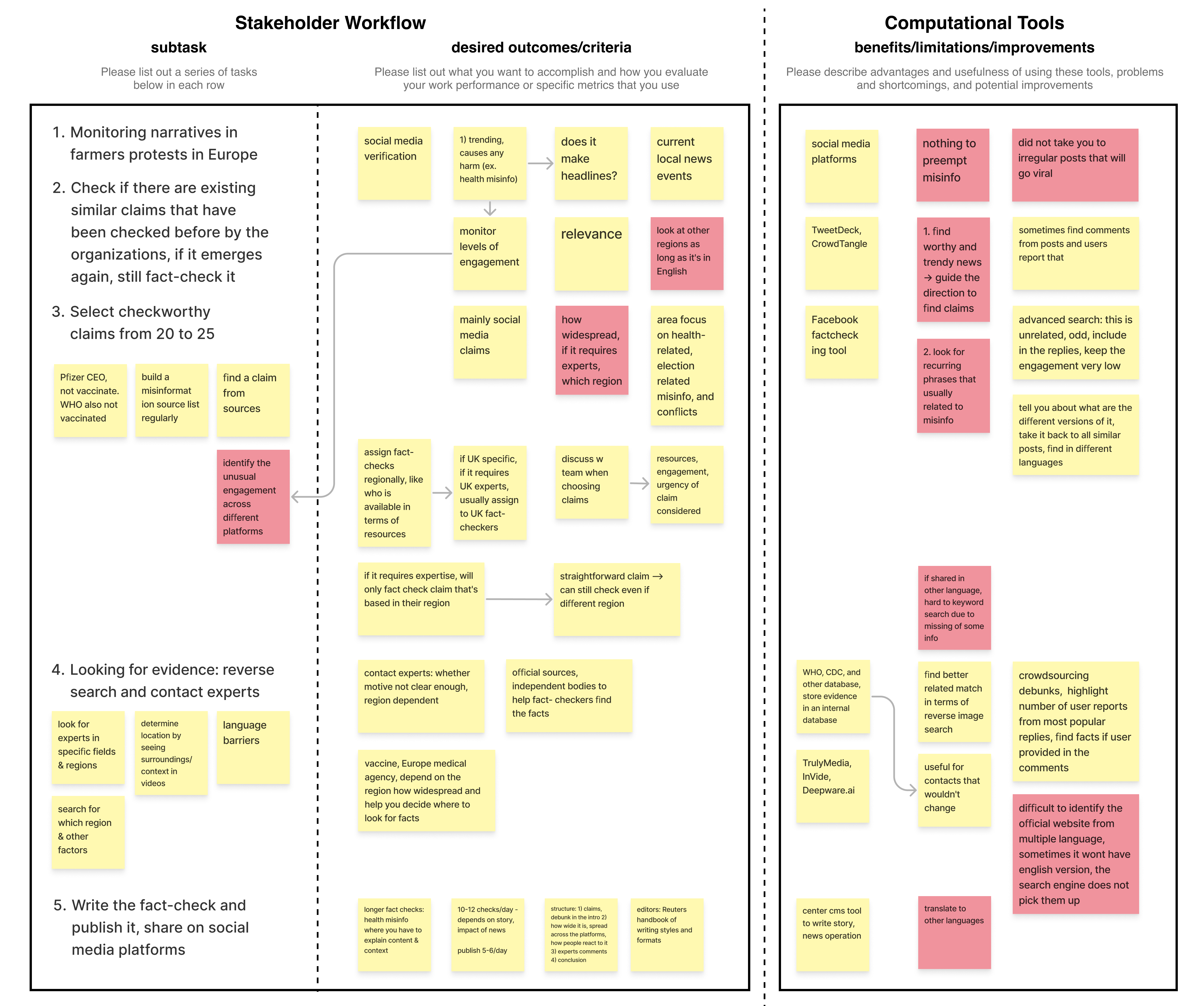

Fact-checking practices exhibit common patterns and workflows across different organizations. A standard fact-checking process includes five stages: (1) choosing claims to check, (2) contacting the speaker, (3) tracing false claims, (4) working with experts, and (5) writing the fact-check. A growing body of NLP research has sought to automate different aspects of fact-checking. To date, most attention has been directed toward the tasks shown in Figure 1: claim detection, checkworthiness and prioritization, evidence retrieval, veracity prediction, and fact-check explanations.

In the first study, we review key aspects of NLP-based fact-checking: task formulation, dataset construction, modeling, and human-centered strategies such as explainable models and human-in-the-loop approaches. We then review the efficacy of applying NLP-based fact-checking tools to assist human fact-checkers. We found that although many NLP techniques have been developed, few have been successfully integrated into existing computational tools that assist human fact-checkers.

This gap may stem from insufficient alignment with fact-checkers' needs, practices, and values. In part, simplified problem formulations that permit full automation neglect important aspects of real-world fact-checking, which remains a highly complex process requiring subjective judgment and human knowledge to corroborate claims in their local contexts. This is the research question to which we now turn.

Matchmaking NLP Capabilities with Human Fact-checking Workflow

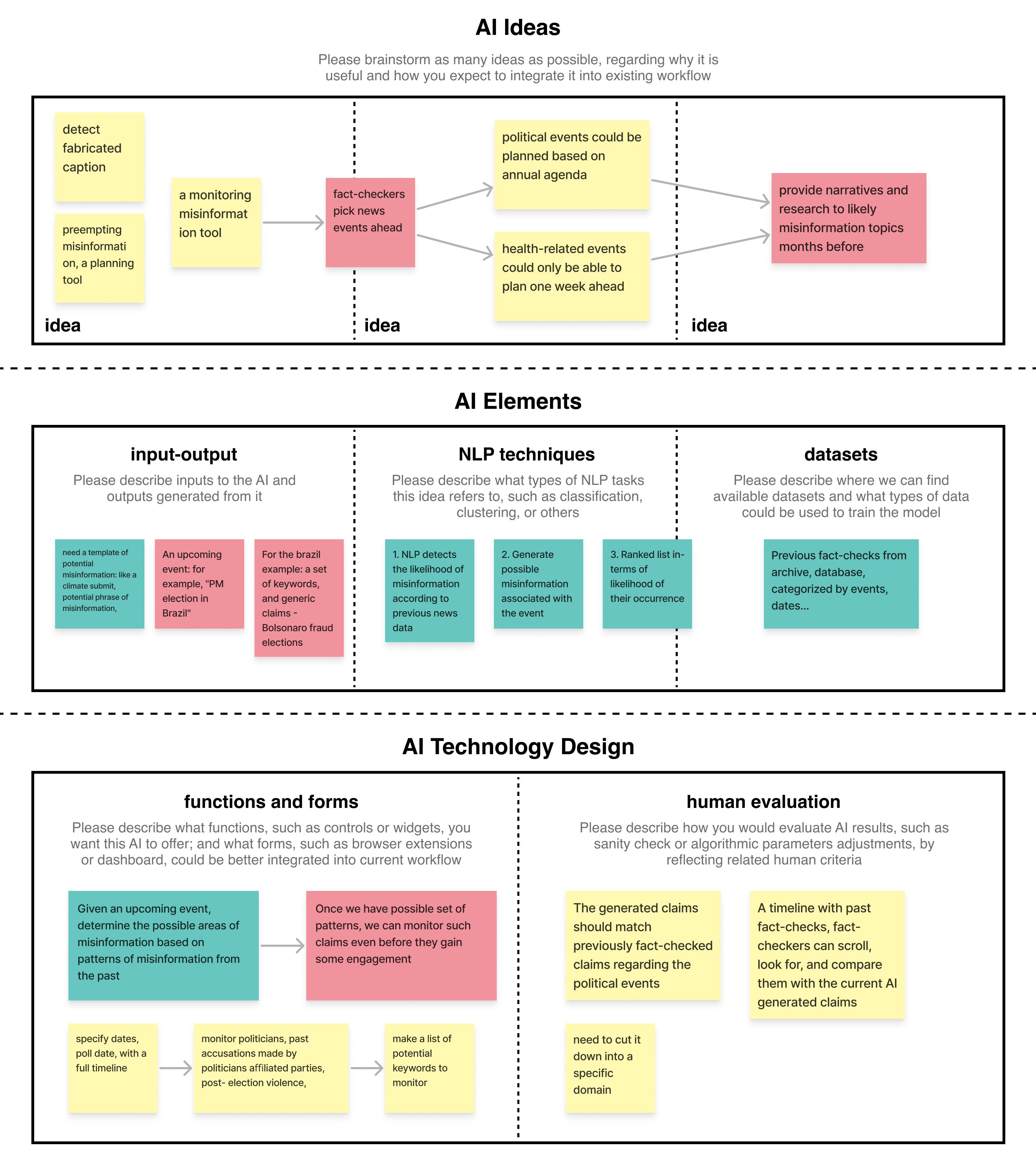

In the second study, we seek to bridge this gap by empowering stakeholders to brainstorm AI ideas that are both novel and feasible. Fact-checkers and AI researchers hold deeper conversations to explore appropriate design choices that make better use of existing AI techniques, so that the resulting design ideas are practically useful for fact-checkers and more likely to be developed in the real world. In particular, we introduce Matchmaking for AI, which extends Bly and Churchill’s Matchmaking concept into an AI co-design process that maps AI techniques to the user activities where those techniques may have the most impact.

Matchmaking for AI seeks to create "workable ideas" that are both grounded in participants' needs and values and informed by their understanding of AI capabilities. To generate such outcomes, we tailor a three-step matchmaking process as follows.

Using Matchmaking for AI, we conducted co-design sessions with 22 professional fact-checkers, yielding 11 design ideas that are, to the best of our knowledge, novel. Whereas existing NLP fact-checking research has focused on claim selection and verification, our ideas point to a broader set of challenges across the fact-checking workflow and fact-checkers' goals. The table below summarizes the outcome of our co-design process and provides pointers to related literature that developers can use as a starting point.

| Fact-checking stages | No. | Design Ideas | Related AI Research |
|---|---|---|---|
| Forecasting claims | 1 | Forecasting Disinformation | [1, 2] |
| Monitoring claims | 2 | Identifying Broader Disinformation Narratives | [3, 4, 5] |
| | 3 | Dynamic Credibility Monitoring of Social Media Users | [6, 7, 8, 9, 10] |
| Selecting claims | 4 | Finding and Providing Context for Ambiguous Claims | [11, 12] |
| | 5 | Personalized Claim Filtering and Selection | [13, 14, 15, 16, 17, 18, 19, 4, 5] |
| | 6 | Personal Bias Warning System for Claim Selection | [4, 5] |
| Investigating facts | 7 | Human-AI Teaming for Fast Generation of Fact-check Briefs | [20, 12, 21, 22] |
| | 8 | Identifying Official Databases and Formulating Queries for Verifying Quantitative Claims | [23, 24] |
| Writing fact-checks | 9 | Understanding Reader Engagement for Fact-checking Reports | [25] |
| | 10 | AI Assistance in Writing Fact-checking Reports | [22] |
| | 11 | AI Assistance in Critiquing and Editing Fact-checking Reports | [26] |